ARE THE ROBOTS COMING?

It is mid-2030, and you arrive at work in a self-driving car. While you are getting on with the business of teaching young children, your AI assistant writes emails for you to approve and drafts your tax return. In your setting, children spend part of each day talking and playing with smart toys, which can ‘hear’ and respond to them based on their interests and stage of development. You return home to find dinner prepped and your vacuuming done by a self-repairing service robot… If these predictions sound far-fetched, it is worth noting that all of these AI-based technologies already exist in some form.

There is widespread agreement that artificial intelligence (AI) will lead to a new industrial revolution in every sphere of human activity, including education. PwC estimates that up to one in three current jobs will be done by AI by the 2030s, and that AI could increase global GDP by 14 per cent.

For some, AI is the ultimate threat to humanity. Others see this fear as similar to the outcry that greeted the advent of the calculator and the internet. The UK government has embraced AI: it hosted a global summit on AI safety last year and, in 2021, set out a ten-year national strategy to ‘make the UK a global AI superpower’.

What experts agree on is that children, who will be entering the workforce in less than two decades, need to be prepared, though not necessarily in ways we can fully foresee.

Anastasia Betts, an education researcher, former teacher and founder of the tech and learning think-tank Learnology Labs, talks of ‘a human-AI work partnership’. She says, ‘AI crunches trillions of data points and from processing that it is synthesising and deriving insights about what is important at any given moment. It’s like working with a novice teacher. It doesn’t know what it doesn’t know – all it does is scrape the internet for information and crunch it down to its essence. AI has access to all the things that are out there – the good, bad and ugly. There is bias baked into those responses because we as humans are biased.’

She adds, ‘What is the human intelligence that these children will need in 15 years to compete in a workforce demanding AI competence? Things we think of as related to the Characteristics of Effective Learning – problem solving, creative thinking, empathetic relationship building, emotional intelligence, collaboration – will be key.’

Samia Kazi, founder of several early years training companies in the Middle East and globally, writes that AI is ‘not good at ethical decision-making, making moral choices, or applying social emotional intelligence. These are skills teachers must nurture in children … they will be especially important as children begin to interface more with AI.’

AI INNOVATIONS

Smart toys

The idea of young children spending time playing with an AI toy which can ‘remember’ personal information will be discomfiting to many. One such toy, Hello Barbie, which had voice recognition (like Alexa) and connected to Wi-Fi, was pulled over privacy and hacking concerns in 2017.

A more up-to-date smart toy is Miko 3 – a robot which talks and remembers past conversations, so it can address the child by name and adjust its responses to the mood of the child. Miko has a touch-screen face which can be used to view content, such as animated audiobooks, games and TV shows, through trusted children’s content providers. A parent app is designed to monitor progress and screen time. The manufacturers say the robot doesn’t store any information.

John Siraj Blatchford, an educational consultant, is one of many who are concerned that ‘robots of this kind may be developed even further to offer a replacement for real life, in-person education and care’. But he also points out that personalised learning is a powerful incentive for developers to come up with AI tools that can target specific interests and unique stages of development, ‘providing every child with the kind of support that is currently only possible from a 1:1 tutor or a nanny’.

In a research paper on a pilot of the personalised online maths programme My Math Academy for three- and four-year-olds at a school in Texas, Betts points out that ‘it remains incredibly challenging for a teacher to discern in a group of 20-30 children … the prior knowledge that each child brings to bear, what they are learning, how well they are mastering the targeted content’.

She found that ‘when provided with personalised instruction … even the youngest students are able to exceed conventional grade-level expectations’.

Tovah Klein, director of the Barnard College Center for Toddler Development (see box), cautions that for very young children, ‘The problem with personalised learning is learning is not linear. It’s not first they learn a and then b. The brain takes in a tremendous amount of information – the routine of the day is more important learning than the letter of the day. And children have to feel safe and secure before they learn anything.’

Back-office functions

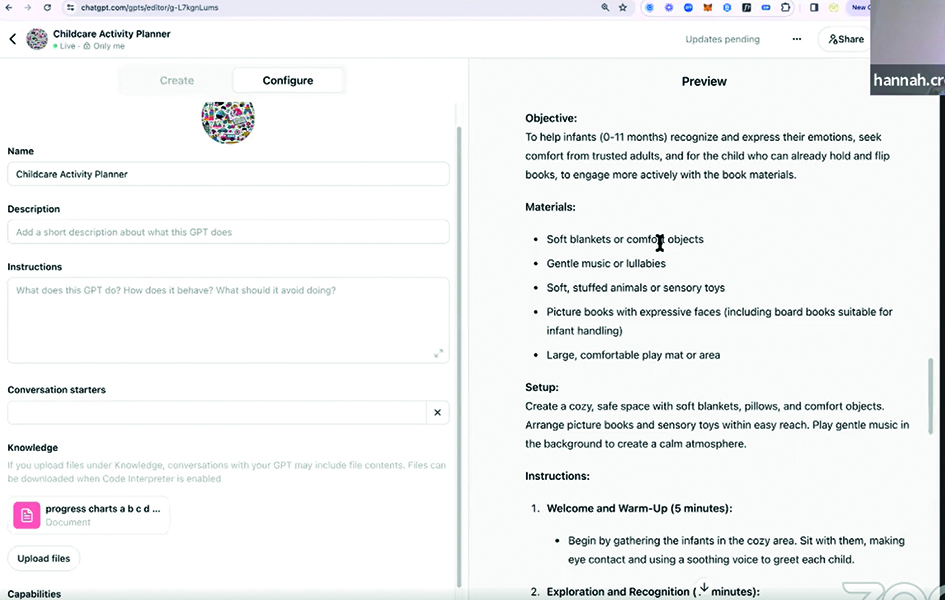

UK innovation company nesta has come up with four potential uses of AI in the early years – teaching, operations, family and content generation (AI has already been used, somewhat controversially, for writing a children’s book). These are focused on LLMs – large language models (see box) – which produce text. The company thinks this ‘could help to reduce some of the workload and provide creative support for practitioners.’

The company says these models can ‘produce lesson plans, generate activity ideas, design games or weave stories – all aligned to an individual child’s interests and developmental level’.

Laurie Smith, nesta’s head of foresight research, says the dangers of AI, such as confabulation or hallucination – producing plausible-sounding but incorrect information – make it unsuitable for use with children directly. He likens uses of AI to ‘getting from a blank sheet of paper to a first draft’, adding, ‘We thought it might be particularly relevant to early years education partly because there was lots of written material, and lots of generative AI uses written texts.’

For example, in one of the prototypes, a personalised activity generator, the Department for Education’s Development Matters guidance for England has been used to ‘train’ the AI to reduce the potential for these ‘hallucinations’. The generator is designed to let practitioners pick specific or predetermined learning goals referenced in the guidance and enter themes for the activity to match children’s interests. The company points out that any teaching tools based on generative AI will require a competent ‘practitioner in the loop’ to evaluate the results.

One drawback of the technology, Smith says, is ‘explainability’: unlike Google, AI doesn’t show you its sources. ‘You don’t know exactly what is going on inside the box. It’s trained on loads of data and comes up with an answer, but you don’t know how it came up with that.’

Kazi, an applied computing graduate, does not use child-facing AI but requires all directors in her childcare programmes to have a premium AI account – which allows the uploading of documents such as curriculum guidance – to help come up with templates for back-office tasks. These include drafting job descriptions and emails, and coming up with ideas for events. She says, ‘People think you need a lot of skills to use AI, but you need more IT skills to do a presentation or put together an Excel spreadsheet.’

Each training centre has an AI assistant – a chatbot – and practitioners are ‘encouraged to use them as a smart Google’; the skill is in interpreting the AI, Kazi says. ‘Sometimes it will give you bad results – but who said that is a bad thing? Like having a stupid idea in a meeting is not a bad thing, because then you know what you don’t want.’

She adds that this makes a level of child development expertise even more important. ‘You have to be able to say “that is not best practice” because you are analysing results which are sometimes very convincing – but wrong.’

PRIVACY CONCERNS

‘If you look at the fine print of these AI tools, they have the authority to have ownership of the information you are entering,’ Kazi adds. ‘If you upload personal information about a particular child, [you don’t know] who has got access to that. You wouldn’t publish a photo on Facebook that you don’t want an employer to see. Use AI in the same way.’ Confidential information such as the opening of a new childcare centre shouldn’t be given to AI because it will be assimilated into the AI ‘brain’ and could come up in responses to a competitor, for example, she adds.

However, Betts, who also has significant privacy fears, adds the ‘trillions of learning trajectories being crunched and turning out insights’ when coupled with data on ethnicity, age, gender and language could help drive big advances in our understanding of how people learn. She says, ‘My learning scientist mind wants all of that data because I want to better understand how knowledge is acquired. Do we think the way we say kids acquire math skills is the way they acquire them? Does this skill really precede this skill, or are they better taught in tandem?’

In response to fears over some of the darker sides of AI, the Council of Europe is developing a legal instrument to regulate the use of AI in education in order to protect the human rights of teachers and students. Meanwhile, the EU AI Act, the first comprehensive AI regulation, which restricts certain forms of AI, was passed in March.

The UK is taking a ‘pro-innovation’ approach to regulation, relying on existing legal frameworks rather than new legislation, and has created an AI Safety Institute. The DfE and the devolved governments’ education departments have published advice on the use of AI on their websites, but none of it is specific to the early years.

FUTURE IMPLICATIONS

According to a parliamentary review of the use of AI in education published in January, some education experts have expressed concerns over whether AI will ultimately deskill teachers, and whether students will become over-reliant on it. This was echoed in UNESCO guidance from 2023, which says ‘while GenAI may be used to challenge and extend learners’ thinking, it should not be used to usurp human thinking’.

Kazi doesn’t think AI will be able to replace teachers. ‘That blood, sweat and tears you have to go through to learn the job, AI is never going to replace.’

Betts thinks all early years practitioners should be using AI ‘as a thought partner’, adding, ‘AI allows for greater metacognition – understanding how you learn, and examining your own thoughts and biases. The downside is, do we get lazy and complacent? Teachers are so overwhelmed – it’s like having a partner teacher that does all the idea generation and takes it off your plate.

‘But it is something we are going to have to grapple with because here it is, and if you are not using it, your colleague is using it.

‘I think we are going to have to do a lot of introspection: What does my work mean now with this? What am I uniquely bringing to the partnership?’

What is AI?

The term artificial intelligence (AI) was coined in the 1950s and refers to machines which can ‘think’ or operate autonomously. It is now found in facial recognition technology, Alexa, chatbots and even spellcheck.

Generative AI: defined by the Alan Turing Institute as an artificial intelligence system that generates text, images, audio, video or other media in response to user prompts.

Machine learning: a branch of artificial intelligence concerned with using data and algorithms to imitate the way that humans learn, with systems gradually becoming more accurate the more they are used.

Large language model: these are AI systems trained on a vast amount of text that can carry out language-related tasks such as generating text, answering questions and translation. ChatGPT and Google Bard are examples.

CASE STUDY: testing nesta’s prototypes

By David Wright, former owner of Paintpots nursery and, prior to this, systems programmer for IBM

In trying out these prototypes, produced by nesta, I started with an open mind ready to understand their functionality, potential use and benefits.

Personalised Activity Generator

This is based on Development Matters and invites the user to select a target age group, an area of learning and learning goals within it. It then offers suggestions for activities to support achievement of the desired outcome.

This tool is aimed at adults looking for activity ideas. My main concern is the risk of its output being deployed unthinkingly. As educators, it is our knowledge and experience of our children and the warm, positive relationships we develop with them that underpin their development. The generation of some potential ideas to support children’s expressed needs and interests could be useful, but I would be uncomfortable with the notion of this as a replacement for professional care and teaching skills.

Early Years Parenting Chatbot

This is aimed at providing advice and guidance to parents, based on the NHS Start for Life website. Of the three prototypes, I think this one probably has the best potential. I found the answers to my test questions – ‘I need advice on back to sleep guidance’, ‘At what age should I stop feeding my baby?’, ‘How can I stop my child from biting others?’ – to be informative and supportive, with links to additional references. It could be a very helpful resource for parents and early years settings, provided its content can be assured as accurate – this is already noted as a concern on the website itself.

Conclusion

AI does provide benefits for administrative and rules-based tasks. It can provide quick and helpful access to relevant data sources. How applicable this is to day-to-day practice within early years settings is questionable. I cannot see us replacing early years teachers with robots just yet.

- Nesta produced these prototypes as a one-off experiment. They are available online for anyone to use: https://tinyurl.com/43hu8dps

How can we protect children from AI?

By Tovah Klein, Barnard College Center for Toddler Development and author of Raising Resilience: How to Help Our Children Thrive in Times of Uncertainty

‘Child development is to do with human interactions and relationships. AI is not about relationships. That is why this is so jarring,’ says Dr Klein.

‘I don’t think it has to interfere with [our] young children’s world. They don’t need an explanation of AI – for a three-year-old, it’s a bit beyond where they are – understanding what’s real and what’s make-believe.

‘Children need access to a full 3D world, at the centre of which is human interaction. Ideally it’s a safe environment that has some connection to nature and materials they can work with through all their senses. That’s where genuine learning goes on.

‘There is nothing in child development that says the child needs to be with an adult 24/7. But if a child in all its waking hours is distracted, that’s a problem. The question is how we as adults avoid getting so taken by AI, so that we remain present for our children.’

She adds there is a risk AI makes learning dull: ‘Often what happens is children ask a question and parents feel like they have to know everything to answer it. Let’s say your four-year-old says “the sun sets on the river, I think it sleeps in the river”. It’s OK for children to think that way.

‘Sometimes adults want to be like evangelists. They say “no, actually that’s not where the sun goes” – but if you allow children to sit with that, at some point they start to say “can we learn more?” That’s when you go to books or their online equivalents for information.

‘But if you jump to the end [by using a search engine or AI], it is not very satisfying and you are depriving them of agency, the “aha” of discovery. That’s the building of the human. For real learning to happen, you want that falling down frustration. Not figuring it out is as important as figuring it out.’